WaveNet

Abstract

This is a clone of Chainer-Examples-WaveNet, together with an experiment run on Google Colaboratory.

Details of Operation

Please see the document "Synthesize_Human_Speech_with_WaveNet" in the docs folder. It is a saved copy of the web page "chainer-colab-notebook, Synthesize Human Speech with WaveNet" listed in the references.

Experiment on Google Colaboratory

The experiment runs the chainer-colab-notebook "Synthesize Human Speech with WaveNet" on the CSTR VCTK Corpus.

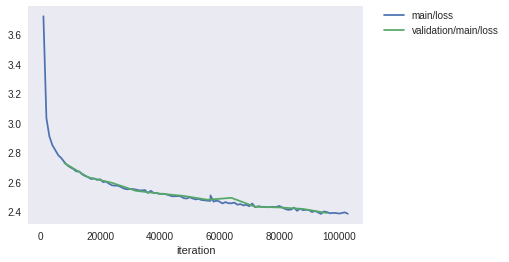

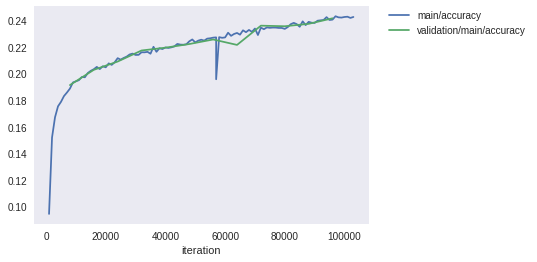

Loss and accuracy versus iteration are plotted for about 20 hours of computation (12 epochs).

The unusual drop at iteration 57000 comes from resuming training from a snapshot after the Colaboratory session time limit was reached.

Accuracy is still too low (and loss still too high), so waveform generation will fail without conditioning.

Samples

The samples folder contains the original and generated wav files, and a model file (a snapshot).

The numeric suffix of the snapshot_iter file name is the iteration number.

The original wav files are a few of the Pannous spoken English digits (see the reference link).

When generating with this model file, specify --n_loop 2 as a generate.py argument, because the chainer-colab-notebook WaveNet uses n_loop = 2.
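The n_loop value matters because it sets how many times the stack of dilated causal convolutions is repeated, which changes both the network's layers and its receptive field (see the receptive field reference by musyoku). Below is a minimal sketch of that calculation, assuming kernel size 2, dilations 1, 2, 4, ..., 2^(n_layer-1) within each loop, n_layer = 10, and 16 kHz audio. These values are assumptions for illustration, not read from the notebook, and any extra initial causal convolution is not counted.

```python
def receptive_field(n_loop: int, n_layer: int, kernel_size: int = 2) -> int:
    """Receptive field (in samples) of a repeated stack of dilated causal convolutions.

    Assumption: each loop uses dilations 1, 2, 4, ..., 2**(n_layer - 1), and the
    whole stack is repeated n_loop times. Any initial causal convolution is ignored.
    """
    # A layer with dilation d and kernel size k widens the field by (k - 1) * d samples.
    per_loop = sum((kernel_size - 1) * 2 ** i for i in range(n_layer))
    return n_loop * per_loop + 1


# Hypothetical settings: n_layer = 10 and a 16 kHz sample rate are assumptions.
SAMPLE_RATE = 16000
for n_loop in (2, 4):  # 4 is only a comparison value
    rf = receptive_field(n_loop, n_layer=10)
    print(f"n_loop={n_loop}: {rf} samples = {rf / SAMPLE_RATE * 1000:.1f} ms")
```

Under these assumptions n_loop = 2 gives 2047 samples (about 128 ms). Because a different n_loop also means a different number of layers, a snapshot trained with n_loop = 2 would most likely not load into a model built with another value.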

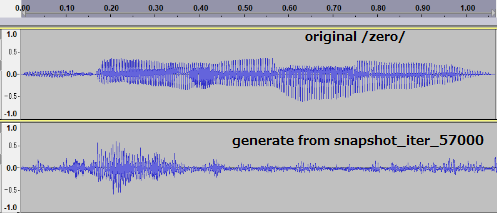

At iteration 57000 (7 epochs), the generated waveform is still noisier than the original human speech waveform.

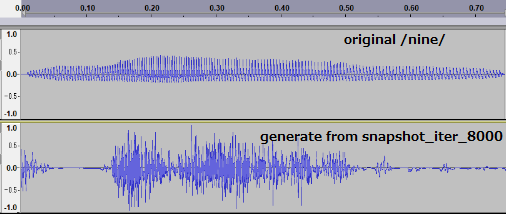

Although this waveform was generated at only iteration 8000 (1 epoch), some echo of the utterance /nine/ (the digit 9) can be heard.

This may be an effect of the conditioning.

Reference

- chainer-colab-notebook, Synthesize Human Speech with WaveNet

- chainer-examples-wavenet

- CSTR VCTK Corpus

- wav of Pannous, Description

- receptive field width, calculation method, by musyoku